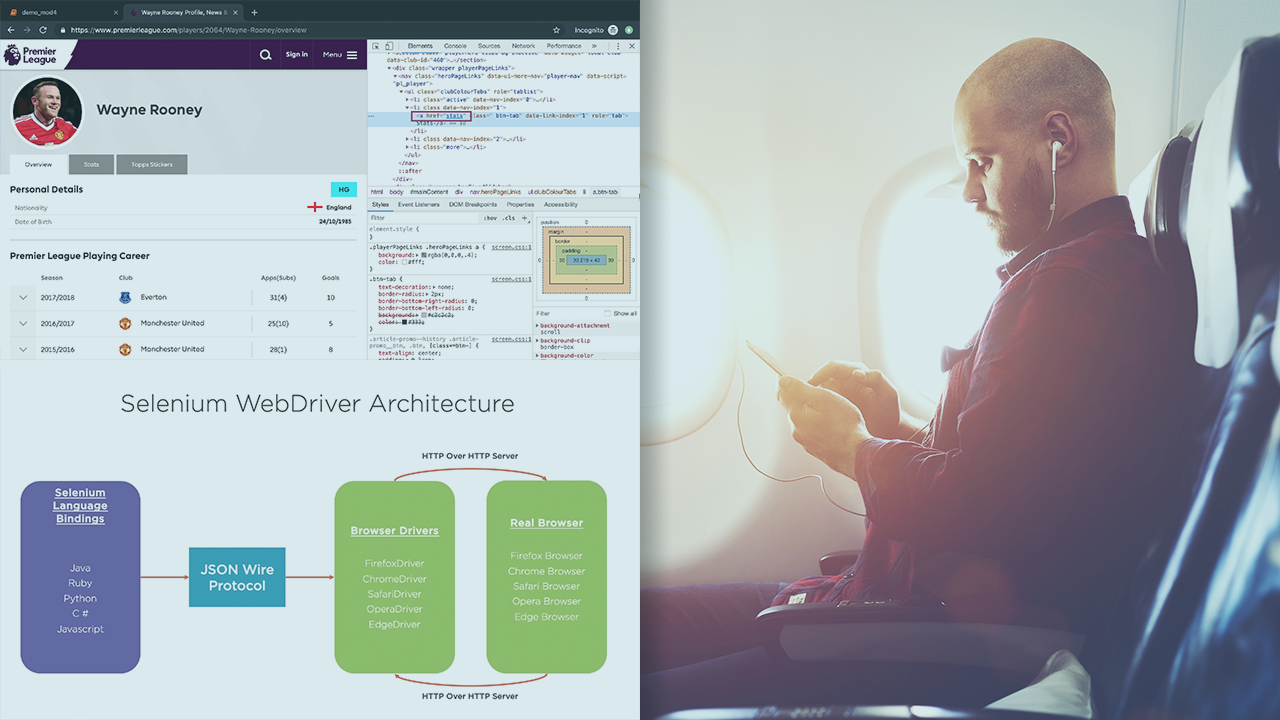

Selenium is a framework for web testing that can drive various browsers; it was initially made for testing front-end components and websites. As you can probably guess, whatever one would like to test, another would like to scrape, and Selenium is well suited to scraping too.

A while back I wrote a post on how to scrape web pages using C# and HtmlAgilityPack (It was in May? So long ago? Wow!). This works fine for static pages, but most web pages are dynamic apps where elements appear and disappear when you interact with the page. So we need a better solution.

Selenium is an open-source application/library that lets us automate browsing using code. And it is awesome. In this tutorial, I’m going to show how to scrape a simple dynamic web page that changes when an element is clicked. A prerequisite for this tutorial is having the Chrome browser installed on your computer (more on that later).

Let’s start by creating a new .NET Core project, named DynamicWebScraping here.

To use Selenium we need two things: a Selenium WebDriver which interacts with the browser, and the Selenium library which connects our code with the Selenium WebDriver. You can read more in the docs. Fortunately, both of them come as NuGet packages that we can add to the solution. We’ll also add a library that provides some Selenium extensions.
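For reference, once the packages are installed, the project file should contain entries along these lines. This is only a sketch: the ChromeDriver version is the one quoted in this post, the Selenium.WebDriver version is an assumption (pick whichever release matches your setup), and the extensions library is left out because its name is not given in the text.

```xml
<ItemGroup>
  <!-- The Selenium library our code talks to. The version shown is an assumption. -->
  <PackageReference Include="Selenium.WebDriver" Version="3.141.0" />
  <!-- The driver that controls Chrome. Its major version must match your installed Chrome. -->
  <PackageReference Include="Selenium.WebDriver.ChromeDriver" Version="85.0.4183.8700" />
</ItemGroup>
```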

One important thing to note when you install these packages is the version of the Selenium.WebDriver.ChromeDriver that is installed, which looks something like this: PackageReference for package 'Selenium.WebDriver.ChromeDriver' version '85.0.4183.8700'. The major version of the driver (in this case 85) must match the major version of the Chrome browser that is installed on your computer (you can check the version you have by going to Help->About in your browser).

To demonstrate the dynamic scraping, I’ve created a web page that has the word “Hello” on it that, when clicked, adds the word “World” below it. I’m not a web developer and don’t pretend to be, so the code here is probably ugly, but it does the job.

I added this page to the project and defined that the page must be copied to the output directory of the project so it can be easily consumed by the scraping code. This is achieved by adding the following lines to the DynamicWebScraping.csproj project file somewhere between the opening and closing Project nodes:
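A fragment along the following lines would do this. It is a sketch rather than the exact lines from the project: the file name `page.html` is an assumption, so substitute the name of the page you added.

```xml
<ItemGroup>
  <!-- "page.html" is an assumed file name; use the name of your own page. -->
  <None Update="page.html">
    <!-- Copy the page next to the compiled program whenever it is newer than the existing copy. -->
    <CopyToOutputDirectory>PreserveNewest</CopyToOutputDirectory>
  </None>
</ItemGroup>
```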

The scraping code will navigate to this page and wait for the heading1 element to appear. When it does, it will click on the element and wait for the heading2 element to appear, fetching the textContent that is located in that element.
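The flow described above can be sketched roughly as follows. This is a minimal illustration, not necessarily the code from the repository. It assumes the page is called `page.html` and sits in the output directory, and it uses a small hand-written polling helper (`WaitForElement`, a hypothetical name) as a stand-in for whatever wait helpers the extensions library provides. Only the element ids heading1 and heading2 come from the page described in this post.

```csharp
using System;
using System.Diagnostics;
using System.IO;
using System.Threading;
using OpenQA.Selenium;
using OpenQA.Selenium.Chrome;

namespace DynamicWebScraping
{
    internal static class Program
    {
        // Hypothetical helper: polls until an element with the given id
        // exists and is displayed, or throws once the timeout has passed.
        private static IWebElement WaitForElement(IWebDriver driver, string id, TimeSpan timeout)
        {
            var stopwatch = Stopwatch.StartNew();
            while (stopwatch.Elapsed < timeout)
            {
                // FindElements returns an empty collection (instead of throwing)
                // when nothing matches yet.
                var matches = driver.FindElements(By.Id(id));
                if (matches.Count > 0 && matches[0].Displayed)
                {
                    return matches[0];
                }

                Thread.Sleep(250);
            }

            throw new WebDriverTimeoutException(
                $"Element '{id}' did not appear within {timeout.TotalSeconds} seconds.");
        }

        private static void Main()
        {
            // Assumed file name; the page is expected next to the compiled program.
            var pagePath = Path.Combine(AppContext.BaseDirectory, "page.html");

            // Disposing the driver closes the browser window when we are done.
            using (var driver = new ChromeDriver())
            {
                // Open the local page through a file:// URL.
                driver.Navigate().GoToUrl(new Uri(pagePath).AbsoluteUri);

                // Wait for the first heading, then click it.
                var heading1 = WaitForElement(driver, "heading1", TimeSpan.FromSeconds(10));
                heading1.Click();

                // The click makes the second heading appear; read its textContent.
                var heading2 = WaitForElement(driver, "heading2", TimeSpan.FromSeconds(10));
                Console.WriteLine(heading2.GetAttribute("textContent"));
            }
        }
    }
}
```

Polling with FindElements keeps the sketch dependent only on the core Selenium package. In a real project you would more likely use the wait helpers that ship with Selenium or its extension libraries. Newer Selenium releases also offer GetDomProperty as a more explicit way to read textContent.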

Let’s build and run the project.

The program opens a browser window and starts to interact with it, returning the text inside the second heading. Pretty cool, right? I have to admit that the first time I ran this it felt really powerful, and it opens a whole new world of things to build… If only I had more time :-).

As always, the full source code for this tutorial can be found in my GitHub repository.

Hoping that the next post comes sooner. Until next time, happy coding!